Year in Review: Lunar Energy on the progress of US home energy storage through ‘turbulent 2025’ and the way ahead for virtual power plants

In his 40 years leading McLeod Software, one of the nation’s largest providers of transportation management systems for truckers and 3PLs (third-party logistics providers), Tom McLeod has seen many a new technology product introduced with much hype and promise, only to fade in real-world practice and fail to mature into a productive application.

In his view, as new tech players have come and gone, the basic demand that shippers and trucking operators place on technology has stayed simple and essentially unchanged: “Find me a way to use computers and software to get more done in less time and [at a] lower cost,” he says.

“It’s been the same goal, from decades ago when we replaced typewriters, all the way to today finding ways to use artificial intelligence (AI) to automate more tasks, streamline processes, and make the human worker more efficient,” he adds. “Get more done in less time. Make people more productive.”

The difference between now and the pretenders of the past? McLeod and others believe that AI is the real thing and, as it continues to develop and mature, will be incorporated deeper into every transportation and logistics planning, execution, and supply chain process, fundamentally changing and forcing a reinvention of how shippers and logistics service providers operate and manage the supply chain function.

“But it is not a magic bullet you can easily switch on,” McLeod cautions. “While the capabilities look magical, at some level it takes time to train these models and get them using data properly and then come back with recommendations or actions that can be relied upon,” he adds.

One of the challenges is that so much supply chain data today remains highly unstructured—by one estimate, as much as 75%. Converting and consolidating myriad sources and formats of data, and ensuring it is clean, complete, and accurate remains perhaps the biggest challenge to accelerated AI adoption.

Often today when a broker is searching for a truck, entering an order, quoting a load, or pulling a status update, someone is interpreting that text or email, extracting information from the transportation management system (TMS), and creating a response to the customer, explains Doug Schrier, McLeod’s vice president of growth and special projects. “With AI, what we can do is interpret what the email is asking for, extract that, overlay the TMS information, and use AI to respond to the customer in an automated fashion,” he says.
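The interpret, extract, overlay, and respond steps Schrier describes can be sketched as a small pipeline. This is a minimal illustration under loudly stated assumptions: `TMS_RATES`, `interpret_email`, `overlay_tms`, and `respond` are hypothetical names, a regular expression stands in for the AI model, and a dictionary stands in for the TMS. It is not McLeod's implementation.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical TMS rate table keyed by (origin, destination); values in USD.
TMS_RATES = {("Chicago", "Dallas"): 1850.0, ("Atlanta", "Miami"): 1200.0}

@dataclass
class QuoteRequest:
    origin: str
    destination: str

def interpret_email(body: str) -> Optional[QuoteRequest]:
    """Pull origin and destination out of free text.

    A regular expression stands in for the AI model; it only understands
    phrasing of the form "from <city> to <city>".
    """
    match = re.search(r"from\s+([A-Za-z ]+?)\s+to\s+([A-Za-z ]+?)[.?!\n]", body)
    if match is None:
        return None
    return QuoteRequest(match.group(1).strip(), match.group(2).strip())

def overlay_tms(request: QuoteRequest) -> Optional[float]:
    """Look the lane up in the hypothetical TMS data."""
    return TMS_RATES.get((request.origin, request.destination))

def respond(body: str) -> str:
    """Draft a reply, or hand the email to a person when automation can't help."""
    request = interpret_email(body)
    if request is None:
        return "ROUTE TO HUMAN: could not interpret request"
    rate = overlay_tms(request)
    if rate is None:
        return f"ROUTE TO HUMAN: no rate on file for {request.origin} to {request.destination}"
    return f"Quote for {request.origin} to {request.destination}: ${rate:,.2f}"

print(respond("Can you quote a dry van from Chicago to Dallas?\nThanks"))
```

The fallback to a person mirrors the article's point that the goal is to remove the mundane work while keeping a human in the loop for anything the automation cannot handle.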

To come up with a price quote using traditional methods might take three or four minutes, he’s observed. An AI-enabled process cuts that down to five seconds. Similarly, entering an order into a system might take four to five minutes. With AI interpreting the email string and other inputs, a response is produced in a minute or less. “So if you are doing [that task] hundreds of times a week, it makes a difference. What you want to do is get the human adding the value and [use AI] to get the mundane out of the workflow.”
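To see why "hundreds of times a week" matters, the timings above can be turned into weekly hours. This sketch uses the midpoints of Schrier's ranges and assumes a hypothetical 200 quotes and 200 orders per week; the volumes are illustrative, not reported figures.

```python
# Hypothetical weekly volumes; the article says only "hundreds of times a week".
TASKS = {
    # task: (manual seconds, AI-assisted seconds, times per week)
    "price quote": (3.5 * 60, 5, 200),   # midpoint of 3-4 minutes vs. 5 seconds
    "order entry": (4.5 * 60, 60, 200),  # midpoint of 4-5 minutes vs. about a minute
}

def weekly_hours_saved(manual_s: float, ai_s: float, per_week: int) -> float:
    """Hours of handling time saved per week for one task type."""
    return (manual_s - ai_s) * per_week / 3600

for task, (manual_s, ai_s, per_week) in TASKS.items():
    print(f"{task}: {weekly_hours_saved(manual_s, ai_s, per_week):.1f} h/week saved")

total = sum(weekly_hours_saved(*values) for values in TASKS.values())
print(f"total: {total:.1f} h/week")
```

Under these assumptions the two tasks together free up roughly 23 hours of staff time per week, about half of one full-time position.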

Yet the growth of AI is happening across a technology landscape that remains fragmented, with some solutions that fit part of the problem, and others that overlap or conflict. Today it’s still a market where there is not one single tech provider that can be all things to all users.

In McLeod Software’s view, the company’s job is to focus on the mission of providing a highly functional primary TMS platform, and then to complement and enhance it with partners who each provide a specialized piece of an ever-growing solution puzzle. “We currently have built, over the past three decades, 150 deep partnerships, which equates to about 250 integrations,” says Ahmed Ebrahim, McLeod’s vice president of strategic alliances. “Customers want us to focus on our core competencies and work with best-of-breed parties to give them better choices [and a deeper solution set] as their needs evolve,” he adds.

One example of such a best-of-breed partnership is McLeod’s arrangement with Qued, an AI-powered application developer that provides McLeod TMS clients with connectivity and process automation for every load appointment scheduling mode, whether through a portal, email, voice, or text.

Before Qued was integrated, there were about 18 steps a user had to complete to get an appointment back into the TMS, notes Tom Curee, Qued’s president. With Qued, those steps are reduced to virtually zero and require no human intervention.

As soon as a stop is entered into the TMS, it is immediately and automatically routed to Qued, which reaches out to the scheduling platform or location, secures the appointment, and returns an update into the TMS with the details. It eliminates manual appointment-making tasks like logging on and entering data into a portal, and rekeying or emailing, and it significantly enhances the value and efficiency of this particular workflow activity for McLeod users.
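The flow described above is event driven: entering a stop triggers scheduling, and the result is written back without anyone rekeying it. A minimal sketch of that pattern follows. Every name in it (`Tms`, `Stop`, `make_scheduler`, `fake_booking`) is hypothetical, and the booking function is a stub rather than a real portal, email, voice, or text integration; it does not reflect Qued's actual API.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

@dataclass
class Stop:
    stop_id: str
    location: str
    channel: str                      # "portal", "email", "voice", or "text"
    appointment: Optional[str] = None

@dataclass
class Tms:
    """Toy TMS that notifies subscribers whenever a stop is entered."""
    stops: dict = field(default_factory=dict)
    subscribers: list = field(default_factory=list)

    def enter_stop(self, stop: Stop) -> None:
        self.stops[stop.stop_id] = stop
        for notify in self.subscribers:
            notify(stop)              # push the new stop out immediately

    def update_appointment(self, stop_id: str, slot: str) -> None:
        self.stops[stop_id].appointment = slot

def make_scheduler(tms: Tms, book: Callable[[Stop], str]) -> Callable[[Stop], None]:
    """Return a handler that books an appointment and writes it back to the TMS."""
    def on_stop_entered(stop: Stop) -> None:
        slot = book(stop)             # contact the location's scheduling channel
        tms.update_appointment(stop.stop_id, slot)
    return on_stop_entered

def fake_booking(stop: Stop) -> str:
    # Hypothetical stub: a real integration would talk to the facility's system.
    return f"{stop.location} 08:00 via {stop.channel}"

tms = Tms()
tms.subscribers.append(make_scheduler(tms, fake_booking))
tms.enter_stop(Stop("S1", "DC-42", "portal"))
print(tms.stops["S1"].appointment)   # DC-42 08:00 via portal
```

Keeping the booking function pluggable is what lets one handler cover every scheduling mode: only `book` changes per channel.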

The three-year freight recession has also taken a toll on technology investment. Whereas in better times, logistics and trucking firms would focus on buying tech to reduce costs, enhance productivity, and improve customer service, the constant financial pressure has narrowed that focus.

“First and exclusively, it is now on ‘How do we create efficiency by replacing people and really bring cost levels down because rates are still extremely low and margins really tight,’” says Bart De Muynck, a former Gartner research analyst covering the visibility and supply chain tech space, and now principal at consulting firm Bart De Muynck LLC.

Most industry operators he’s spoken with have looked at AI. One example he cites as ripe for transformation is freight brokerages, “where you have rows and rows of people on the phone.” They are asking the question “Which of these processes or activities can we do with AI?”

Yet De Muynck points to one issue that is proving to be a roadblock to change and transformation. “For many of these companies, their foundational technology is still on older architectural platforms,” in some cases proprietary ones, he notes. “It’s hard to combine AI with those.” And because of years of low margins and cash flow restrictions, “they have not been able to replace their core ERP [enterprise resource planning system] or the TMS for that carrier or broker, so they are still running on very old tech.”

Those players, De Muynck says, will discover a disconcerting reality: the difficulty of trying to apply AI on a platform that is decades old. “That will yield some efficiencies, but those will be short term and limited in terms of replacing manual tasks,” he says.

The larger question, De Muynck says, is “How do you reinvent your company to become more successful? How do we create applications and processes that are based on the new architecture so there is a big [transformative] lift and shift [and so we can implement and deploy foundational pieces fairly quickly]? Then with those solutions build something with AI that is truly transformational and effective.” And, he adds, bring the workforce along successfully in the process.

“People have some things in their jobs they have to do 100 times a day,” often a menial or boring task, De Muynck adds. “AI can automate or streamline those tasks in such a way that it improves the employee’s work experience and job satisfaction, while driving efficiencies. [Rather than eliminate a position], brokers can redirect worker time to more higher-value, complex tasks that need human input, intuition, and leadership.”

“With logistics, you cannot take people completely out of the equation,” he emphasizes. “[The best AI solutions] will be a human paired up with an intelligent AI agent. It will be a combination of people [and their tribal knowledge and institutional experience] and technology,” he predicts.

Shippers, truckers, and 3PLs are experiencing an awakening around the possibilities of technologies today and what modern architecture, in-the-cloud platforms, and AI-powered agents can do, says Ann Marie Jonkman, vice president–industry advisory for software firm Blue Yonder. For many, the hardest decision is where to start. It can be overwhelming, particularly in a market environment shaped by chaos, uncertainty, and disruption, where surviving every week sometimes seems a challenge in itself.

“First understand and be clear about what you want to achieve and the problems you want to solve” with a tech strategy, she advises. “Pick two or three issues and develop clear, defined use cases for each. Look at the biggest disruptions—where are the leakages occurring and how do I start?”

Among the most frequent investment targets she sees is broad automation, not just of physical activity through robotics but also of business processes, workflows, and operations. It is also about being able to understand tradeoffs, getting ahead of and removing waste, and moving the organization from a reactive posture to one that’s more proactive and informed and can leverage what Jonkman calls “decision velocity.” That places a priority not only on connecting the silos but also on incorporating clean, accurate, and actionable data into one command center or control tower. When built and deployed correctly, such central platforms can provide near-immediate visibility into supply chain health as well as more efficient and accurate management of the end-to-end process.

Those investments in supply chain orchestration not only accelerate and improve decision-making around stock levels, fulfillment, shipping choices, and overall network and partner performance, but also provide the ability to “respond to disruption and get a handle on the data to monitor and predict disruption,” Jonkman adds. It’s tying together the nodes and flows of the supply chain so “fulfillment has the order ready at the right place and the right time [with the right service]” to reduce detention and ensure customer expectations are met.

It is important for companies not to sit on the sidelines, she advises. Get into the technology transformation game in some form. “Just start somewhere,” even if it is a small project, learn and adapt, and then go from there. “It does not need to be perfect. Perfection can be the enemy of success.”

The speed of technology innovation always has been rapid, and the advent of AI and automation is accelerating that even further, observes Jason Brenner, senior vice president of digital portfolio at FedEx. “We see that as an opportunity, rather than a challenge.”

He believes one of the industry’s biggest challenges is turning innovation into adoption, “ensuring new capabilities integrate smoothly into existing operations and deliver value quickly.” Brenner adds that in his view, “innovation is healthy and pushes everyone forward.”

Execution at scale is where the rubber meets the road. “Delivering technology that works reliably across millions of shipments, geographies, and constantly changing conditions requires deep operational integration, massive data sets, and the ability to test solutions in multiple environments,” he says. “That’s where FedEx is uniquely positioned.”

Before the arrival of the newest forms of AI, “there were shipping tasks that had defied automation for decades,” notes Mark Albrecht, vice president of artificial intelligence for freight broker and 3PL C.H. Robinson. “Humans had to do this repetitive, time-consuming—I might even say mind-numbing—yet essential work.”

Early forms of AI, such as machine learning tools and algorithms, provided a hint of what was to come. C.H. Robinson (CHR), which has one of the largest in-house IT development groups in the industry, has been using those tools for a decade.

Large language models and generative AI were the next big leap. “It’s the advent of agentic AI that opens up new possibilities and holds the greatest potential for transformation in the coming year,” Albrecht says, adding, “Agentic AI doesn’t just analyze or generate content; it acts autonomously to achieve goals like a human would. It can apply reasoning and make decisions.”

CHR has built and deployed more than 30 AI agents, Albrecht says. Collectively, they have performed millions of once-manual tasks—and generated significant benefits. “Take email pricing requests. We get over 10,000 of those a day, and people used to open each one, read it, get a quote from our dynamic pricing engine, and send that back to the customer,” he notes. “Now a proprietary AI agent does that—in 32 seconds.”

Another example is load tenders. “It used to take our people upwards of four hours to get to those through a long queue of emails,” he recalls. That work is now done by an AI agent that reads the email subject line, body, and attachments; collects other needed information; and “turns it into an order in our system in 90 seconds,” Albrecht says. He adds that if the email is for 20 orders, “the agent can handle them simultaneously in the same 90 seconds,” whereas a human would have to handle them sequentially.
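Albrecht's point about handling 20 orders "in the same 90 seconds" is the difference between concurrent and sequential processing. The sketch below illustrates it with Python's `asyncio`; `create_order` and `process_tender` are hypothetical names, the 90-second step is scaled down to 0.1 seconds, and `asyncio.sleep` stands in for waiting on systems. It shows the concurrency pattern, not CHR's agent.

```python
import asyncio
import time

async def create_order(order_ref: str, seconds: float) -> str:
    """Hypothetical stand-in for an agent turning one tendered load into an order.

    asyncio.sleep simulates time spent waiting on systems and lookups.
    """
    await asyncio.sleep(seconds)
    return f"order-{order_ref}"

async def process_tender(order_refs: list[str], seconds: float) -> list[str]:
    # gather() runs the coroutines concurrently and returns results in input order.
    return list(await asyncio.gather(*(create_order(ref, seconds) for ref in order_refs)))

refs = [f"L{i:02d}" for i in range(20)]
start = time.perf_counter()
orders = asyncio.run(process_tender(refs, 0.1))
elapsed = time.perf_counter() - start
# Twenty 0.1-second tasks finish in about 0.1 s together, versus about 2 s one after another.
print(len(orders), f"{elapsed:.2f}s")
```

Concurrency helps here because each order spends most of its time waiting on I/O; work that is genuinely CPU-bound would need parallel workers instead.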

Time is money for the shipper at every step of the logistics process. So the faster a rate quote is provided, order created, carrier selected, and load appointment scheduled, the greater the benefits to the shipper. “It’s all about speed to market, which whether a retailer or manufacturer, often translates into if you make the sale or keep an assembly line rolling.”

Strip away all the hype, and the one tech deliverable that remains table stakes for all logistics providers and their customers is a platform that provides a timely and accurate view of where goods are, who has them, and when they will reach their destination. “First and foremost is real-time visibility that enables customer access to the movement of their product across the supply chain,” says Penske Executive Vice President Mike Medeiros. “Then, getting further upstream and allowing them to be more agile and responsive to disruptions.”

As for AI, Medeiros says, “it’s not about replacing [workers]; it’s about pointing them in the right direction and helping [them] get more done in the same amount of time, with a higher level of service and enabling a more satisfying work experience. It’s human capital complemented by AI-powered agents as virtual assistants. We’ve already [started] down that path.”

Argonne National Laboratory has entered into a partnership with RIKEN, Fujitsu Limited and NVIDIA to advance artificial intelligence and high-performance computing to accelerate scientific discovery. The agreement, based on a memorandum of understanding signed January 27, aligns with DOE’s Genesis Mission, a national initiative to use AI ....

The post Argonne Partners with RIKEN, Fujitsu and NVIDIA on AI for Science and HPC appeared first on Inside HPC & AI News | High-Performance Computing & Artificial Intelligence.

Our special guest today is Paul Bloch, President and Co-founder of DDN, the high performance storage and intelligent data platform company.

AI runs on massive amounts of fast and reliable data, which makes topics related to ....

The post @HPCpodcast: DDN’s Paul Bloch on High Performance Storage Strategies for AI Data Centers appeared first on Inside HPC & AI News | High-Performance Computing & Artificial Intelligence.

Milpitas, CA, Jan. 20, 2026, VDURA today announced the release of the Flash Volatility Index and Storage Economics Optimizer Tool, an updated quarterly resource designed to help enterprises, infrastructure operators, and partners quantify flash pricing risk and evaluate storage architecture economics under changing market conditions. Enterprise flash pricing has entered a period of sustained volatility driven by hyperscaler […]

The post VDURA Introduces Flash Volatility Index and Storage Economics Tool appeared first on Inside HPC & AI News | High-Performance Computing & Artificial Intelligence.

Good Martin Luther King Day (US) to you! Chips in their many forms and the manufacturing of them dominated the HPC-AI industry ....

The post HPC News Bytes 20260119: Cerebras Breakout, Taiwan’s Chips-Tariff Deal, Apple-Intel Foundry Reunion, Hit Google AI Documentary appeared first on Inside HPC & AI News | High-Performance Computing & Artificial Intelligence.

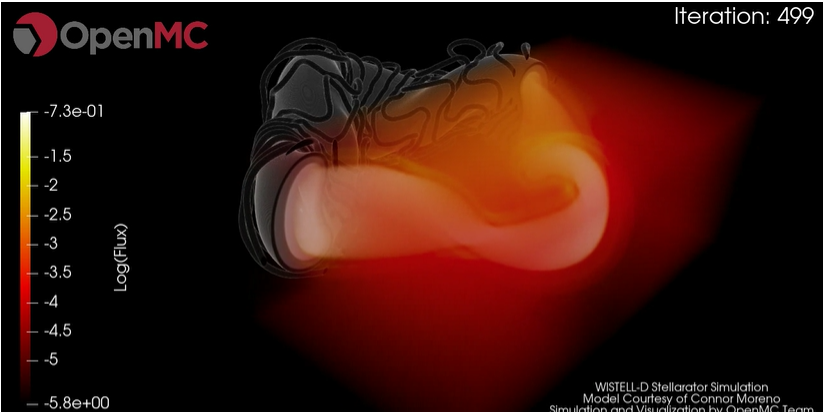

The award-winning OpenMC software package is helping researchers at Argonne National Laboratory and the Massachusetts Institute of Technology develop next-generation nuclear and fusion ....

The post Argonne, MIT Using Open-Source Code for Nuclear and Fusion Energy Research appeared first on Inside HPC & AI News | High-Performance Computing & Artificial Intelligence.

German utility RWE implemented the first known virtual power plant (VPP) in 2008, aggregating nine small hydroelectric plants for a total capacity of 8.6 megawatts. In general, a VPP pulls together many small components—like rooftop solar, home batteries, and smart thermostats—into a single coordinated power system. The system responds to grid needs on demand, whether by making stored energy available or reducing energy consumption by smart devices during peak hours.
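The core idea, many small resources answering one grid request, can be sketched as a greedy dispatcher. This is a simplified illustration: `Resource`, `dispatch`, and the fleet values are hypothetical, and largest-first greedy allocation is a stand-in for the optimization real VPP platforms perform.

```python
from dataclasses import dataclass

@dataclass
class Resource:
    name: str
    discharge_kw: float   # power a battery can export on request
    curtail_kw: float     # load a smart device can shed on request

def dispatch(resources: list[Resource], request_kw: float) -> dict[str, float]:
    """Greedily meet a grid request from many small resources.

    Each resource is asked for up to its full flexible capacity (discharge
    plus curtailment) until the request is met. Real VPP platforms also weigh
    state of charge, customer preferences, and local grid limits.
    """
    plan: dict[str, float] = {}
    remaining = request_kw
    # Draw on the largest resources first to keep the number of dispatched devices small.
    for r in sorted(resources, key=lambda r: r.discharge_kw + r.curtail_kw, reverse=True):
        if remaining <= 0:
            break
        share = min(r.discharge_kw + r.curtail_kw, remaining)
        plan[r.name] = share
        remaining -= share
    return plan

fleet = [
    Resource("home-battery-1", 5.0, 0.0),
    Resource("home-battery-2", 5.0, 0.0),
    Resource("thermostat-1", 0.0, 1.5),
    Resource("solar+battery-3", 3.0, 0.5),
]
plan = dispatch(fleet, 12.0)
print(plan)
```

A 12 kW request is met by the two home batteries plus part of the solar-and-battery site, while the thermostat stays in reserve.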

VPPs had a moment in the mid-2010s, but market conditions and the technology weren’t quite aligned for them to take off. Electricity demand wasn’t high enough, and existing sources such as coal, natural gas, nuclear, and renewables met demand and kept prices stable. Additionally, although hardware such as solar panels and batteries was getting cheaper, the software to link and manage these resources lagged behind, and there was little financial incentive for it to catch up.

But times have changed, and less than a decade later, the stars are aligning in VPPs’ favor. They’re hitting a deployment inflection point, and they could play a significant role in meeting energy demand over the next 5 to 10 years in a way that’s faster, cheaper, and greener than other solutions.

Electricity demand in the United States is expected to grow 25 percent by 2030 due to data center buildouts, electric vehicles, manufacturing, and electrification, according to estimates from technology consultant ICF International.

At the same time, a host of bottlenecks are making it hard to expand the grid. There’s a backlog of at least three to five years on new gas turbines. Hundreds of gigawatts of renewables are languishing in interconnection queues, where there’s also a backlog of up to five years. On the delivery side, there’s a transformer shortage that could take up to five years to resolve, and a dearth of transmission lines. This all adds up to a long, slow process to add generation and delivery capacity, and it’s not getting faster anytime soon.

“Fueling electric vehicles, electric heat, and data centers solely from traditional approaches would increase rates that are already too high,” says Brad Heavner, the executive director of the California Solar & Storage Association.

Enter the vast network of resources that are already active and grid-connected—and the perfect storm of factors that make now the time to scale them. Adel Nasiri, a professor of electrical engineering at the University of South Carolina, says variability of loads from data centers and electric vehicles has increased, as has deployment of grid-scale batteries and storage. There are more distributed energy resources available than there were before, and the last decade has seen advances in grid management using autonomous controls.

At the heart of it all, though, is the technology that stores and dispatches electricity on demand: batteries.

Over the past 10 years, battery prices have plummeted: The average lithium-ion battery pack price fell from US $715 per kilowatt-hour in 2014 to $115 per kWh in 2024. Their energy density has simultaneously increased thanks to a combination of materials advancements, design optimization of battery cells, and improvements in the packaging of battery systems, says Oliver Gross, a senior fellow in energy storage and electrification at automaker Stellantis.
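The two pack prices quoted above imply both a total drop and a compound annual rate of decline. The sketch below uses only the figures from the text.

```python
start_price, end_price = 715.0, 115.0   # US $/kWh, 2014 and 2024 (from the text)
years = 2024 - 2014

total_decline = 1 - end_price / start_price
annual_rate = (end_price / start_price) ** (1 / years) - 1   # compound annual change

print(f"total decline: {total_decline:.1%}")         # total decline: 83.9%
print(f"compound annual change: {annual_rate:.1%}")  # compound annual change: -16.7%
```

In other words, pack prices fell by roughly a sixth every year for a decade.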

The biggest improvements have come in batteries’ cathodes and electrolytes, with nickel-based cathodes starting to be used about a decade ago. “In many ways, the cathode limits the capacity of the battery, so by unlocking higher-capacity cathode materials, we have been able to take advantage of the intrinsic higher capacity of anode materials,” says Greg Less, the director of the University of Michigan’s Battery Lab.

Increasing the percentage of nickel in the cathode (relative to other metals) increases energy density because nickel can hold more lithium per gram than materials like cobalt or manganese, exchanging more electrons and participating more fully in the redox reactions that move lithium in and out of the battery. The same goes for silicon, which has become more common in anodes. However, there’s a trade-off: These materials cause more structural instability during the battery’s cycling.

The anode and cathode are surrounded by a liquid electrolyte. The electrolyte has to be electrically and chemically stable when exposed to the anode and cathode in order to avoid safety hazards like thermal runaway or fires and rapid degradation. “The real revolution has been the breakthroughs in chemistry to make the electrolyte stable against more reactive cathode materials to get the energy density up,” says Gross. Chemical compound additives—many of them based on sulfur and boron chemistry—for the electrolyte help create stable layers between it and the anode and cathode materials. “They form these protective layers very early in the manufacturing process so that the cell stays stable throughout its life.”

These advances have primarily been made on electric vehicle batteries, which differ from grid-scale batteries in that EVs are often parked or idle, while grid batteries are constantly connected and need to be ready to transfer energy. However, Gross says, “the same approaches that got our energy density higher in EVs can also be applied to optimizing grid storage. The materials might be a little different, but the methodologies are the same.” The most popular cathode material for grid storage batteries at the moment is lithium iron phosphate, or LFP.

Thanks to these technical gains and dropping costs, a domino effect has been set in motion: The more batteries deployed, the cheaper they become, which fuels more deployment and creates positive feedback loops.
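The feedback loop described above is commonly modeled as an experience curve (Wright's law): each doubling of cumulative production cuts unit cost by a fixed fraction, the learning rate. The sketch below is illustrative only. The 18 percent learning rate is an assumption, not a figure from this article, and the $115 starting point is the 2024 pack price cited earlier.

```python
import math

def wright_cost(c0: float, x0: float, x: float, learning_rate: float) -> float:
    """Unit cost after cumulative volume grows from x0 to x under Wright's law.

    learning_rate is the fractional cost drop per doubling of cumulative volume.
    """
    b = -math.log2(1 - learning_rate)      # experience exponent
    return c0 * (x / x0) ** (-b)

# Hypothetical inputs: $115/kWh starting cost and an assumed 18% learning rate.
for doublings in range(4):
    print(doublings, round(wright_cost(115.0, 1.0, 2.0 ** doublings, 0.18), 1))
```

Under these assumptions each doubling of deployment multiplies the cost by 0.82, which is the "more deployment, cheaper batteries" loop in numerical form.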

Regions that have experienced frequent blackouts—like parts of Texas, California, and Puerto Rico—are a prime market for home batteries. Texas-based Base Power, which raised $1 billion in Series C funding in October, installs batteries at customers’ homes and becomes their retail power provider, charging the batteries when excess wind or solar production makes prices cheap, and then selling that energy back to the grid when demand spikes.
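The charge-cheap, sell-dear pattern described for Base Power can be illustrated with a simple threshold rule. This is a hypothetical sketch: `schedule`, the price series, and the thresholds are invented for illustration, and a real operator would optimize against forecasts, round-trip losses, and market rules rather than use fixed cutoffs.

```python
def schedule(prices: list[float], capacity_kwh: float, step_kwh: float,
             buy_below: float, sell_above: float) -> list[str]:
    """Threshold rule for a home battery: charge when power is cheap, export when it is dear."""
    soc = 0.0                         # state of charge, kWh
    actions = []
    for price in prices:
        if price <= buy_below and soc + step_kwh <= capacity_kwh:
            soc += step_kwh           # store cheap wind or solar energy
            actions.append("charge")
        elif price >= sell_above and soc >= step_kwh:
            soc -= step_kwh           # sell back into the demand spike
            actions.append("discharge")
        else:
            actions.append("hold")
    return actions

# Hypothetical hourly prices in $/MWh: cheap overnight wind, then an evening spike.
prices = [20, 15, 18, 40, 60, 250, 300, 45]
actions = schedule(prices, capacity_kwh=10.0, step_kwh=5.0, buy_below=25, sell_above=200)
print(actions)
```

The battery fills during the first two cheap hours, holds once full, and empties during the two most expensive hours.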

There is, however, still room for improvement. For wider adoption, says Nasiri, “the installed battery cost needs to get under $100 per kWh for large VPP deployments.”

The software infrastructure that once limited VPPs to pilot projects has matured into a robust digital backbone, making it feasible to operate VPPs at grid scale. Advances in AI are key: Many VPPs now use machine-learning algorithms to predict load flexibility, solar and battery output, customer behavior, and grid stress events. This improves the dependability of a VPP’s capacity, which was historically a major concern for grid operators.
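Forecasting how much flexible capacity a fleet will actually deliver is what makes a VPP dependable. The article refers to machine-learning models. The sketch below deliberately swaps in simple exponential smoothing, a much simpler forecasting technique, to show the basic idea of weighting recent performance. The function name and the history values are hypothetical.

```python
def exponential_smoothing(history: list[float], alpha: float) -> float:
    """One-step-ahead forecast via simple exponential smoothing.

    alpha in (0, 1] sets how strongly recent observations are weighted.
    """
    if not history:
        raise ValueError("history must not be empty")
    level = history[0]
    for observation in history[1:]:
        level = alpha * observation + (1 - alpha) * level
    return level

# Hypothetical MW delivered by a fleet in its last four grid events.
delivered_mw = [100.0, 120.0, 110.0, 130.0]
print(exponential_smoothing(delivered_mw, alpha=0.5))   # 120.0
```

A production system would add inputs such as weather, time of day, and battery state of charge, which is where machine-learning models earn their keep.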

[Image credit: Sunrun] While solar panels have advanced, VPPs have been held back until recently by a lack of similar advancement in the needed software.

Cybersecurity and interoperability standards are still evolving. Interconnection processes and data visibility in many areas aren’t consistent, making it hard to monitor and coordinate distributed resources effectively. In short, while the technology and economics for VPPs are firmly in place, there’s work yet to be done aligning regulation, infrastructure, and market design.

On top of technical and cost constraints, VPPs have long been held back by regulations that prevented them from participating in energy markets like traditional generators. SolarEdge recently announced enrollment of more than 500 megawatt-hours of residential battery storage in its VPP programs. Tamara Sinensky, the company’s senior manager of grid services, says the biggest hurdle to achieving this milestone wasn’t technical—it was regulatory program design.

California’s Demand Side Grid Support (DSGS) program, launched in mid-2022, pays homes, businesses, and VPPs to reduce electricity use or discharge energy during grid emergencies. “We’ve seen a massive increase in our VPP enrollments primarily driven by the DSGS program,” says Sinensky. Similarly, Sunrun’s Northern California VPP delivered 535 megawatts of power from home-based batteries to the grid in July, and saw a 400 percent increase in VPP participation from last year.

FERC Order 2222, issued in 2020, requires regional grid operators to allow VPPs to sell power, reduce load, or provide grid services directly to wholesale market operators, and get paid the same market price as a traditional power plant for those services. However, many states and grid regions don’t yet have a process in place to comply with the FERC order. And because utilities profit from grid expansion and not VPP deployment, they’re not incentivized to integrate VPPs into their operations. Utilities “view customer batteries as competition,” says Heavner.

According to Nasiri, VPPs would have a meaningful impact on the grid if they achieve a penetration of 2 percent of the market’s peak power. “Larger penetration of up to 5 percent for up to 4 hours is required to have a meaningful capacity impact for grid planning and operation,” he says.
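Nasiri's thresholds translate into concrete targets once a peak demand is chosen. The sketch below assumes a hypothetical 80 GW regional peak and a hypothetical home battery rated at 5 kW and 10 kWh. None of these values come from the article. Because each home is limited by both power and energy, the larger of the two counts sets the requirement.

```python
import math

def homes_needed(power_mw: float, energy_mwh: float,
                 home_kw: float, home_kwh: float) -> int:
    """Home batteries required when each one is limited by both power and energy."""
    by_power = power_mw * 1000 / home_kw
    by_energy = energy_mwh * 1000 / home_kwh
    return math.ceil(max(by_power, by_energy))

PEAK_MW = 80_000                          # hypothetical regional peak demand
print(f"2% of peak: {PEAK_MW * 0.02:,.0f} MW")

capacity_mw = PEAK_MW * 0.05              # 5 percent of peak...
capacity_mwh = capacity_mw * 4            # ...sustained for 4 hours
print(f"5% for 4 h: {capacity_mw:,.0f} MW, {capacity_mwh:,.0f} MWh")
print(homes_needed(capacity_mw, capacity_mwh, home_kw=5, home_kwh=10))
```

With these assumptions the 4-hour requirement, not the power rating, is the binding constraint: 1.6 million such batteries would be needed, twice the number the power target alone implies.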

In other words, VPP operators have their work cut out for them in continuing to unlock the flexible capacity in homes, businesses, and EVs. Additional technical and policy advances could move VPPs from a niche reliability tool to a key power source and grid stabilizer for the energy tumult ahead.